RESEARCH

THE CAUSAL EFFECTS OF POLITICAL INCIVILITY IN SOCIAL MEDIA DISCUSSIONS (2025)

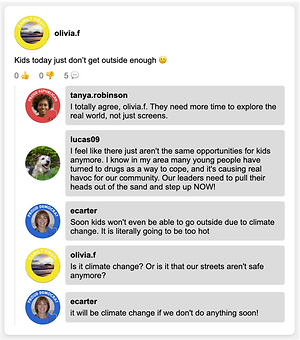

Political discussions serve many important functions in democratic societies, but they increasingly occur on social media, where incivility pervades. How does incivility in online political discussions affect conversational dynamics and outcomes? Using a novel research platform, we conducted a preregistered experiment testing incivility’s causal effects in online political discussions. Participants used a mobile application to access a social media feed manipulated to vary in political incivility, with discussions driven by synthetic users powered by GPT-4. Participants who experienced the politically uncivil feed reported less comfort sharing their views and were less likely to comment on political posts, but paradoxically created more original posts. Their content also contained more hostile features such as profanity and insults. Furthermore, exposure to political incivility led to more negative views of out-party voters, while in-party ratings remained unchanged. These findings highlight how uncivil political discussions on social media simultaneously discourage open expression and fuel hostility.

Read the pre-print here.

A SOCIOLOGICAL TURING TEST: SOCIAL IDENTITY CUES SHAPE WHO IS CLASSIFIED AS HUMAN ONLINE (2025)

The rise of generative artificial intelligence systems complicates our ability to distinguish real people from machine imitators, or “bots.” Drawing upon social psychology, symbolic interactionism, and the sociology of science and technology, we develop a theoretical framework for a “Sociological Turing Test” to explain how social identity cues influence how people differentiate AI from humans. To evaluate our hypotheses, we present a preregistered online experiment with 1,160 respondents who were exposed to social media accounts with varied visual cues about their race, gender, and partisanship. We find that people from dominant social groups are more likely to classify members of minority groups as AI bots. However, our results also show that members of some minority groups are more likely to classify each other as AI bots. These findings suggest that existing social hierarchies extend into human-AI interaction, creating a new burden for marginalized groups in online settings and complicating the processes of social identity construction more broadly. Our research thus contributes to an emerging sociology of generative artificial intelligence by revealing how technological disruption challenges ontology and inter-group relations, and it introduces new methods to study this process in online settings.

Read the pre-print here.

EXPERIMENTS OFFERING SOCIAL MEDIA USERS THE CHOICE TO AVOID TOXIC POLITICAL CONTENT (2025)

Amid concerns that social media algorithms amplify toxic content, platforms are experimenting with giving users more control over their exposure. This study explores how promises of algorithmic control influence user satisfaction and content evaluation. We found that offering users the option to avoid toxic political content increased platform satisfaction. However, those who chose this filtering option rated posts as more hostile than those without the choice, even though all respondents, regardless of choice or experimental condition, viewed the same content. A follow-up experiment revealed that exposure to only positive content did not reduce hostility ratings. Rather, users exposed only to positive posts rated them as more hostile than did those in our original experiment who saw both positive and negative content. These findings challenge the assumption that user autonomy will improve content experiences. Instead, algorithmic choice raises expectations, prompting users to scrutinize content more critically or to attempt to “train” the algorithm to align with their preferences. Platforms must consider how expectations, not just content exposure, shape online experiences.

Read the pre-print here.

DESIGNING SOCIAL MEDIA TO PROMOTE PRODUCTIVE POLITICAL DIALOGUE ON A NEW RESEARCH PLATFORM (2024)

Social media is often blamed for deepening ideological divides, but can it instead foster productive, open-minded dialogue? To explore this, we recruited 1,043 Americans to use the Polarization Lab’s Social Media Accelerator (SMA). Participants were randomly assigned to spend ten minutes on one of two versions of the platform: one in which they saw users earning "popular user" badges for receiving frequent likes, and another in which they saw users earning "open minded" badges for engaging respectfully with opposing viewpoints. We found that participants in the "open minded" group used more intellectually humble language and reported more positive emotions. These results offer new insights into social media's potential to shape dialogue and introduce innovative methods for conducting randomized controlled trials using generative AI.

Read the pre-print here.

OUTNUMBERED ONLINE: THE CONSEQUENCES OF PARTISAN IMBALANCE IN ONLINE POLITICAL DISCUSSION (2024)

Much research on online political discussions has focused on social media "echo chambers" of like-minded individuals, but less is known about how people respond to online environments dominated by those who are politically dissimilar. To explore this, we conducted an experiment using the Polarization Lab’s Social Media Accelerator (SMA), a platform that allows for controlled manipulation of social media features. The app mimics a social media platform and employs AI-powered synthetic users to create a dynamic content feed. We found that engaging in a discussion with mostly out-partisans reduced participants' comfort with sharing opinions and lowered their evaluations of the platform and its users. These findings shed light on the less-studied experience of being outnumbered by opposing views online and demonstrate the utility of the SMA for social science research.

Read the pre-print here.

REDUCING POLITICAL POLARIZATION IN THE UNITED STATES WITH A MOBILE CHAT PLATFORM (2023)

Do anonymous online conversations between people with different political views exacerbate or mitigate partisan polarization? We created a mobile chat platform to study the impact of such discussions. Our study recruited Republicans and Democrats in the United States to complete a survey about their political views. We later randomized them into treatment conditions where they were offered financial incentives to use our platform to discuss a contentious policy issue with an opposing partisan. We found that people who engage in anonymous cross-party conversations about political topics exhibit substantial decreases in polarization compared with a placebo group that wrote an essay using the same conversation prompts. Moreover, these depolarizing effects were correlated with the civility of dialogue between study participants. Our findings demonstrate the potential for well-designed social media platforms to mitigate political polarization and underscore the need for a flexible platform for scientific research on social media.

Read the article, published in Nature Human Behaviour, here.

PERCEIVED GENDER AND POLITICAL PERSUASION: A SOCIAL MEDIA FIELD EXPERIMENT DURING THE 2020 US DEMOCRATIC PRIMARY ELECTION (2023)

Women have less influence than men in a variety of settings. Does this result from stereotypes that depict women as less capable, or from biased interpretations of gender differences in behavior? We present a field experiment that, unbeknownst to the participants, randomized the gender of avatars assigned to Democrats using a social media platform we created to facilitate discussion about the 2020 Democratic primary election. We find that misrepresenting a man as a woman undermines his influence, but misrepresenting a woman as a man does not increase hers. We demonstrate that men’s greater resistance to being influenced and gendered patterns of word use both contribute to this outcome. These findings challenge the prevailing wisdom that women simply need to behave more like men to overcome gender discrimination, and they suggest that narrowing the gap will require simultaneous attention to the behavior of people who identify as women and as men.

Read the article here.

BREAKING THE SOCIAL MEDIA PRISM: HOW TO MAKE OUR PLATFORMS LESS POLARIZING (2021)

Breaking the Social Media Prism, by the lab’s Founding Director Chris Bail, challenges common myths about echo chambers, foreign misinformation campaigns, and radicalizing algorithms, revealing that the solution to political tribalism lies deep inside ourselves. The book draws on innovative online experiments and in-depth interviews with social media users across the political spectrum to explain why stepping outside of our echo chambers can make us more polarized, not less. Wherever you stand on the spectrum of user behavior and political opinion, Bail offers solutions to counter political tribalism from the bottom up and the top down.

Read more about the book here.

ASSESSING THE RUSSIAN INTERNET RESEARCH AGENCY'S IMPACT ON THE POLITICAL ATTITUDES AND BEHAVIORS OF U.S. TWITTER USERS IN LATE 2017 (2020)

There is widespread concern that Russia and other countries have launched social media campaigns designed to increase political divisions in the United States. Though a growing number of studies analyze the strategy of such campaigns, it is not yet known how these efforts shaped the political attitudes and behaviors of Americans. We study this question using longitudinal data that describe the attitudes and online behaviors of 1,239 Republican and Democratic Twitter users from late 2017, merged with nonpublic data from Twitter about the Russian Internet Research Agency (IRA). Using Bayesian regression tree models, we find no evidence that interaction with IRA accounts substantially affected six distinct measures of political attitudes and behaviors over a one-month period. We also find that interactions with IRA accounts were most common among respondents with strong ideological homophily within their Twitter network, high interest in politics, and high frequency of Twitter usage. Together, these findings suggest that Russian trolls might have failed to sow discord because they mostly interacted with people who were already highly polarized. We conclude by discussing several important limitations of our study, especially our inability to determine whether IRA accounts influenced the 2016 presidential election, as well as its implications for future research on social media influence campaigns, political polarization, and computational social science.

Read the article here. This research was funded by the National Science Foundation and Duke University.

EXPOSURE TO COMMON ENEMIES CAN INCREASE POLITICAL POLARIZATION: EVIDENCE FROM A COOPERATION EXPERIMENT WITH AUTOMATED PARTISANS (2020)

Longstanding theory indicates that the threat of a common enemy can mitigate conflict between members of rival groups. We tested this hypothesis in a preregistered experiment in which 1,670 Republicans and Democrats in the United States were asked to complete a collaborative online task with an automated agent, or “bot,” that was labelled as a member of the opposing party. Prior to this task, we exposed respondents to primes about a) a common enemy (involving threats from Iran, China, and Russia); b) a patriotic event; or c) a neutral, apolitical topic. Though we observed no significant differences in the behavior of Democrats as a result of these primes, we found that Republicans, and particularly those with very strong conservative views, were significantly less likely to cooperate with Democrats when primed about a common enemy. We also observed lower rates of cooperation among Republicans who participated in our study during the 2020 Iran crisis, which occurred in the middle of our fieldwork. These findings indicate that common enemies may not reduce inter-group conflict in highly polarized societies, and they contribute to a growing number of studies that find evidence of asymmetric political polarization. We conclude by discussing the implications of these findings for research in social psychology, political conflict, and the rapidly expanding field of computational social science.

Read the pre-print here. This research was funded by the Russell Sage Foundation and Duke University.

EXPOSURE TO OPPOSING VIEWS CAN INCREASE POLITICAL POLARIZATION: EVIDENCE FROM A LARGE-SCALE FIELD EXPERIMENT ON SOCIAL MEDIA (2018)

There is mounting concern that social media sites contribute to political polarization by creating "echo chambers" that insulate people from opposing views about current events. We surveyed a large sample of Democrats and Republicans who visit Twitter at least three times each week about a range of social policy issues. One week later, we randomly assigned respondents to a treatment condition in which they were offered financial incentives to follow, for one month, a Twitter bot that exposed them to messages produced by elected officials, organizations, and other opinion leaders with opposing political ideologies. Respondents were re-surveyed at the end of the month to measure the effect of this treatment, and at regular intervals throughout the study period to monitor treatment compliance. We find that Republicans who followed a liberal Twitter bot became substantially more conservative post-treatment, and Democrats who followed a conservative Twitter bot became slightly more liberal post-treatment. These findings have important implications for the interdisciplinary literature on political polarization as well as the emerging field of computational social science.

Read the article here. This research was funded by the National Science Foundation, the Russell Sage Foundation, the Carnegie Foundation, the Guggenheim Foundation, and Duke University.

USING INTERNET SEARCH DATA TO EXAMINE THE RELATIONSHIP BETWEEN ANTI-MUSLIM AND PRO-ISIS SENTIMENT IN U.S. COUNTIES (2018)

Recent terrorist attacks by second- or third-generation immigrants in the United States and Europe indicate that radicalization may result from the failure of ethnic integration, or from the rise of inter-group prejudice in communities where “home-grown” extremists are raised. Yet such community-level drivers are notoriously difficult to study because public opinion surveys provide biased measures of both prejudice and radicalization. We examine the relationship between anti-Muslim and pro-ISIS internet searches in 3,099 U.S. counties between 2014 and 2016 using instrumental variable models that control for various community-level factors associated with radicalization. We find that anti-Muslim searches are strongly associated with pro-ISIS searches, particularly in communities with high levels of poverty and ethnic homogeneity. Though more research is needed to verify the causal direction of this relationship, this finding suggests that minority groups may be more susceptible to radicalization if they experience discrimination in settings where they are isolated and therefore highly visible, or in communities where they compete with majority group members for limited financial resources. We evaluate the validity of our measures using several other data sources and discuss the implications of our findings for the study of terrorism and inter-group relations, as well as for immigration and counter-terrorism policies.

Read the article here. This research was funded by Duke University.

CHANNELLING HEARTS AND MINDS: ADVOCACY ORGANIZATIONS, COGNITIVE-EMOTIONAL CURRENTS, AND PUBLIC CONVERSATION (2017)

Do advocacy organizations stimulate public conversation about social problems by engaging in rational debate, or by appealing to emotions? We argue that rational and emotional styles of communication ebb and flow within public discussions about social problems due to the alternating influence of social contagion and saturation effects. These “cognitive-emotional currents” create an opportunity structure whereby advocacy organizations stimulate more conversation if they produce emotional messages after prolonged rational debate, or vice versa. We test this hypothesis using automated text-analysis techniques that measure the frequency of cognitive and emotional language within two advocacy fields on Facebook over 1.5 years, together with a web-based application that offered these organizations a complimentary audit of their social media outreach in return for sharing nonpublic data about themselves, their social media audiences, and the broader social context in which they interact. Time-series models reveal strong support for our hypothesis, controlling for 33 confounding factors measured by our Facebook application. We conclude by discussing the implications of our findings for future research on public deliberation, how social contagions relate to each other, and the emerging field of computational social science.

Read the article here. This research was funded by the National Science Foundation.

CULTURAL NETWORKS AND BRIDGES: HOW ADVOCACY ORGANIZATIONS STIMULATE PUBLIC CONVERSATION ON SOCIAL MEDIA (2016)

Social media sites are rapidly becoming one of the most important forums for public deliberation about advocacy issues. However, social scientists have not explained why some advocacy organizations produce social media messages that inspire far-ranging conversation among social media users, while the vast majority receive little or no attention. I argue that advocacy organizations are more likely to inspire comments from new social media audiences if they create “cultural bridges,” or produce messages that combine conversational themes within an advocacy field that are seldom discussed together. I use natural language processing, network analysis, and a social media application to analyze how cultural bridges shaped public discourse about autism spectrum disorders on Facebook over the course of 1.5 years, controlling for various characteristics of advocacy organizations, their social media audiences, and the broader social context in which they interact. I show that organizations that create substantial cultural bridges provoke 2.52 times more comments about their messages from new social media users than those that do not, controlling for these factors. This study thus offers a theory of cultural messaging and public deliberation, as well as computational techniques for text analysis and application-based survey research.

Read the article here. This research was funded by the National Science Foundation.

Check out our white papers on intellectual humility and our novel research platform, the Social Media Accelerator.

For a bird's-eye view of the literature on social media and political dysfunction, see this document Chris co-authored with Jonathan Haidt, or this New Yorker article that describes how a range of experts reacted to this effort.

For a bird's-eye view of polarization in the United States, check out this article in Science that Chris co-authored with a team of leading scholars.

To learn which depolarization strategies work, see this new article that Chris co-authored with another group of experts in Nature Human Behaviour.

Q: Who Funds the Polarization Lab?

A: The Polarization Lab was created using a grant from the Duke Provost’s Office. Our research has been funded by the John Templeton Foundation, the National Science Foundation, the Russell Sage Foundation, and the Guggenheim and Carnegie Foundations. Our 2021 study of anonymity and polarization, which used our scientific platform for social science research, was funded by Duke and partially funded by an unrestricted grant from Facebook’s Integrity Foundational Research Program. Facebook does not provide any compensation to the directors of the lab. To learn more about the type of grant we received from Facebook, visit this link.